Agentic AI : Redefining the Future of Cybersecurity

Table of Contents

Let’s examine what cybersecurity will look like in 2026 and why, as agentic AI advances, conventional tools are becoming outdated. Will agent-based AI ever replace traditional cybersecurity tools?

The landscape of digital defence is undergoing the most radical transformation in decades. For twenty years, the industry was built on the assumption that humans were the primary operators of security tools, with the final say on detection, investigation, and response. The emergence of agentic AI, or artificial intelligence capable of autonomous execution and decision-making, is expected to completely disrupt this model.

1. Defining Agentic AI

The primary difference between AI we’ve seen in recent years (such as standard Large Language Models or Generative AI) and Agentic AI is in autonomy and execution. While traditional generative AI produces text or code, agentic AI is designed to complete complex, multi-step tasks with minimal human intervention.

Agentic AI possesses several unique characteristics:

- Autonomy: It can manage entire workflows rather than just responding to prompts.

- Adaptability: These systems can adapt to new, unexpected scenarios and learn from them.

- Real-time decision-making allows agents to navigate dynamic environments like a live cyberattack, or a complex software migration.

2. The End of Traditional Cybersecurity Tooling

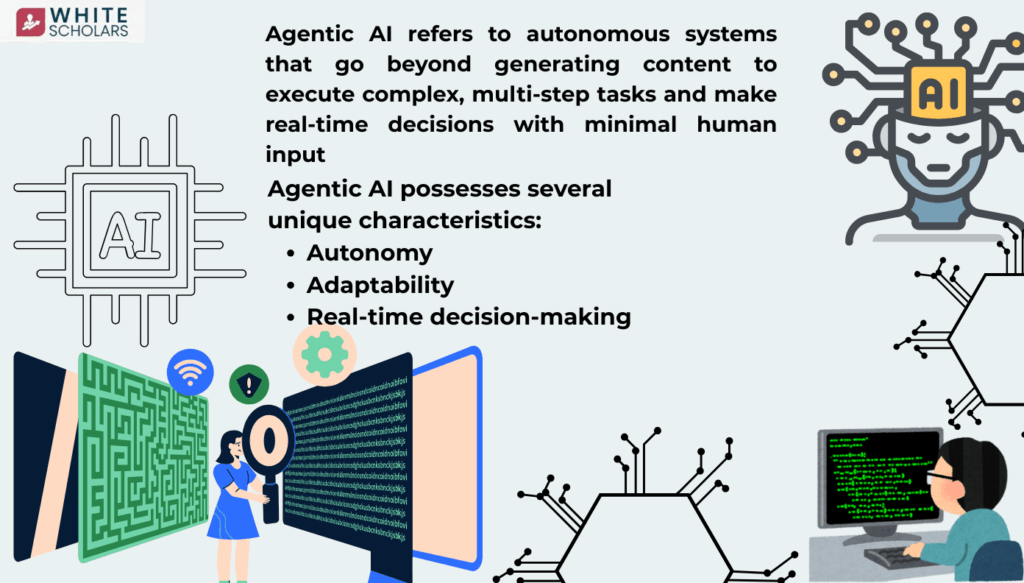

For two decades, cybersecurity vendors provided tools such as Static Application Security Testing (SAST), vulnerability scanners, and penetration testing suites, with the expectation that a human would initiate the investigation and corroborate the findings. This model is described as static, with tools generating alerts and humans performing actions.

By 2026, agentic AI will have eliminated these traditional tools. The transition entails moving from a “human-tool” to a “agentic layer” relationship.

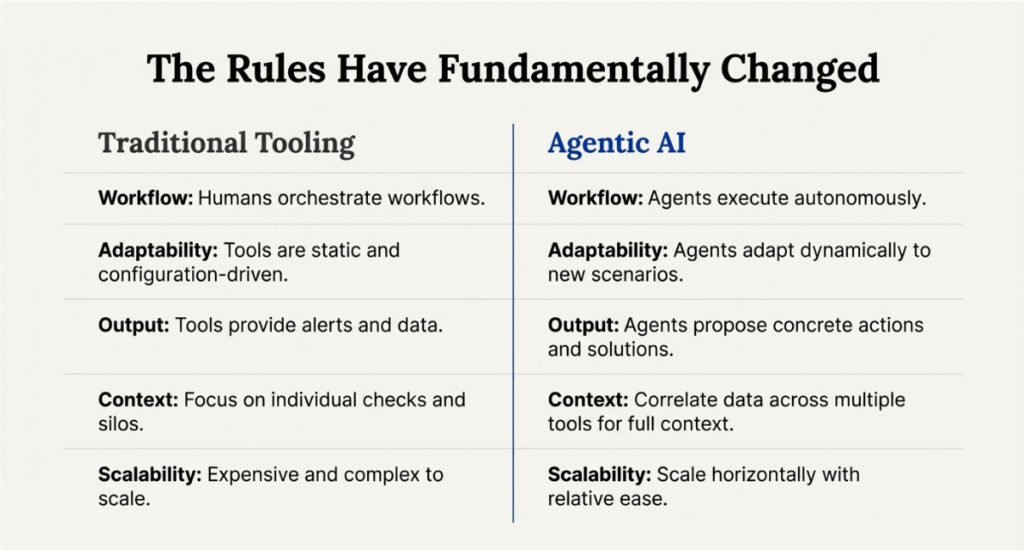

- Orchestration: Previously, humans orchestrated workflows; now, agents do so themselves.

- Static vs. Dynamic: Traditional tools remain static, whereas agents adapt to their surroundings.

- Contextual Intelligence: Rather than looking at individual checks, agents can use APIs to correlate data and gain full context.

- Scaling: Traditional tools are often costly and difficult to scale due to licencing and human resource constraints, whereas agents scale horizontally much more efficiently.

3. “Security Engineer as Software”: Real-World Applications

The introduction of frontier autonomous agents represents a significant development in this field. These are not just co-pilots, but security engineers in software, capable of performing high-level professional tasks without human intervention.

These agents are now able to,

- Architecture and design reviews involve evaluating product specifications and technical designs for security risks during the planning phase.

- Autonomous Penetration Testing: Configuring and running penetration tests independently to identify vulnerabilities.

- Code reviews involve checking code for security flaws during the development cycle.

- Incident Response: Both AWS and CrowdStrike are mentioned as pioneers in integrating AI agents into incident response services to handle “heavy lifting” such as log analysis and correlation.

CrowdStrike is expanding into agents trained on the collective knowledge of expert security analysts, with the goal of handling tasks traditionally reserved for Tier 1 (L1) analysts.

4. The Agentic Layer, a New Architectural Standard

The Agentic Layer, which serves as a bridge between human analysts and security tools, is central to the future of cybersecurity architecture. In this new model, the security analyst no longer works directly with a dozen different dashboards (SIEM, DLP, scanners, and so on). Instead, the analyst communicates with a security agent, which manages the underlying tools using APIs.

This transition is aided by developments such as the Model Context Protocol, which enables agents to communicate across multiple tooling ecosystems. As these agents become more intelligent, they will take over the “grunt work” of cybersecurity, freeing up humans to focus on higher-level supervision.

5. How Agentic AI DIffers from generative AI

The differences can be categorised into four major areas:

1. Autonomy vs. Assistance

Traditional generative AI frequently functions as a “co-pilot” or chatbot, requiring a human to initiate each step of the process. In contrast, Agentic AI is referred to as a security engineer as software.

It does more than just assist; it is self-sufficient, capable of managing entire workflows and learning from experience to adapt to new situations. For example, while a traditional tool may notify a human of a vulnerability, an agentic AI system can conduct penetration testing, code reviews, and architecture analysis without human intervention.

2. Automation of Workflows

In a traditional setup, humans are the central authority in charge of orchestrating workflows, which includes writing detection rules, initiating investigations, and coordinating data between various tools.

• Traditional Tools: Expect humans to manage the process, and they are often static in their function.

• Agentic AI: Does the orchestration itself. It can interact with multiple tools at the same time using APIs (such as the Model Context Protocol) to correlate data and gain a complete picture of a situation.

3. Actions vs. Alerts

These systems produce a fundamental shift in output. Traditional cybersecurity tools are designed to generate alerts that must be investigated and resolved by humans. In contrast, agentic AI performs actions.

It progresses from identifying a problem to performing the “heavy lifting” of incident response, such as log analysis and correlation, in order to resolve the issue.

4. Scalability and adaptation.

Traditional tools can be difficult and costly to scale due to complex licencing and the need for human operators. Agentic AI can scale horizontally more effectively. Furthermore, while traditional generative tools are relatively fixed in their training, agents are designed to adapt dynamically to the environment in which they operate, making them significantly more disruptive to the traditional concept of static security tooling.

6. Security Strategies for Agentic AI

Agentic AI is extremely efficient, but it also carries significant risks. Businesses must use the following five key strategies to secure these autonomous systems:

Authentication and authorisation

Every AI agent must have a distinct identity. Strict access controls are essential; agents should only be allowed to interact with systems that they have explicit permission to access. Robust identity management ensures that an agent’s actions can be traced back to a specific origin.

Output Validation

Artificial intelligence outputs cannot be blindly trusted. Because these systems can still make mistakes, their decisions must be validated before being used in a live environment.

Sandboxing

Ideally, agents should be tested in controlled environments known as sandboxes. Running autonomous agents on live, mission-critical systems without prior testing may expose the company to significant operational risks.

Transparent Logging

Every action taken by an AI agent, including decisions, API calls, and modifications, must be logged. This ensures accountability and enables human teams to conduct “post-mortems” if an agent commits an error.

Continuous Testing and Monitoring

AI security must be just as dynamic as the AI. This includes regular “red teaming” (simulated attacks) and penetration tests designed to identify vulnerabilities in the AI agents themselves.

7. The Shifting Skills Gap: Thriving vs. Dying Skills

As agentic AI takes over the “monotonous” aspects of security, human professionals’ career paths will shift dramatically.

Skills in Decline (At Risk of Replacement):

- Manual Log Correlation: Sifting through logs to look for patterns.

- Basic penetration testing involves pointing a scanner at an IP address and reading the results.

- Writing Detection Rules: Manually creating SIEM rules.

- Static Compliance: Checklist-based and manual code reviews.

- Administrative tasks include opening Jira or ServiceNow tickets and sending manual email updates.

Skills in Demand (Future of Industry):

future skills required to thrive in this autonomous era

1. AI Supervision and Responsible Oversight

This skill entails monitoring the performance and ethics of autonomous agents to ensure that they are operating within defined parameters and making sound decisions.

Supervision is now about evaluating rather than doing the work. Because Agentic AI can perform multi-step tasks and make real-time decisions with minimal input, the professional must keep the agent on track. This includes:

- Operational Oversight: Ensuring that the agent navigates the workflow correctly and does not get stuck in logic loops.

- Ethical auditing involves looking for biases that the agent may have inherited from its training data, which could lead to poor or discriminatory security decisions.

- Performance Metrics: Determining what “success” means for an autonomous agent and intervening when those metrics are not met.

2. Agentic AI Security Threat Modelling

Traditional threat modelling considers how an attacker might exploit a software application or a network protocol. The future requires agentic AI security threat modelling, which investigates the various attack vectors introduced by autonomous agents.

Understanding that the agent is an attack surface is essential in today’s threat modelling. Security professionals should be able to map out:

- Prompt Injection and Manipulation: Identifying how an attacker could “trick” an agent into taking unauthorised actions or leaking data.

- Data Poisoning: Understanding how malicious input can disrupt the agent’s learning process.

- Agent Logic Flaws: Identifying flaws in how an agent interprets complex instructions or architectural documents.

- Escalation Paths: Identifying how an attacker could progress from compromising a single agent to gaining control of the larger “agentic layer” that manages multiple tools.

3. Identity Governance for Non-Human Identities

In an agentic environment, each autonomous agent needs its own identity to access systems, call APIs, and change configurations. This results in a significant shift from managing human users to managing nonhuman identities.

Identity governance will be one of the most important security pillars. Professionals must develop skills in:

- Identity Provisioning: Each agent is assigned a unique, traceable origin to ensure accountability for their actions.

- Least Privilege Management: Ensuring that agents only interact with systems that they have explicit permission to access, thereby preventing agent sprawl, in which autonomous software gains excessive permissions.

- Credential management involves safeguarding the API keys and secrets that agents use to communicate with other tools such as SIEMs, scanners, and cloud environments.

- Social Engineering Defense: Creating strategies to prevent non-human identities from being manipulated by attackers who treat agents as human users in order to gain access.

4. Interpreting Agent Output and Forensic Log Analysis

While agents will handle the “grunt work” of log correlation, humans will still need to interpret the agent’s output to ensure accuracy. This necessitates a thorough technical understanding of the underlying logs, as well as the ability to conduct forensics on the agent’s logic.

Professionals will need to act as “quality control” for AI decisions. This includes the following:

- Decision Verification: Examining the logs to determine why an agent blocked a specific IP or flagged a piece of code as a risk.

- Error Detection: Detecting when an agent misinterprets a task, potentially creating a new vulnerability while attempting to fix another.

- Cross-Tool Validation: Understanding how an agent collects data from various sources (such as SAS, DAS, and scanners) and determining whether the correlation is logically sound.

5. Designing Security Guardrails

As agents take on more autonomous tasks, it is the security professional’s responsibility to design the safeguards that limit what the agent can do without human approval. This includes developing a framework for “human-in-the-loop” (HITL) security.

Guardrail design is an architectural skill that requires a careful balance of efficiency and safety.

- Threshold Setting: Choosing which actions an agent can perform autonomously (e.g., blocking a known malicious IP address) and which require human intervention (for example, shutting down a production server).

- Sandboxing Architectures: Creating isolated environments in which agents can be tested and validated before interacting with live systems.

- Automated Policy Enforcement: Creating rules to prevent an agent from violating corporate security policies or compliance standards while performing autonomous workflows.

6. Cloud-Native Architecture Mastery

Agentic AI is primarily “running in the cloud only.” As a result, a thorough understanding of cloud-native architecture is no longer optional; it is an essential prerequisite for managing agents.

Security professionals must understand the infrastructure that supports these agents. This includes:

- Microservices and Containers: Understanding how agents interact in containerised environments.

- API Security: Understanding the protocols (such as the Model Context Protocol) that enable agents to communicate with various tools across the cloud ecosystem.

- Serverless Security: Managing agents that may perform serverless functions or exist in temporary cloud environments.

7. GRC Engineering and AI Red Teaming

The future of Governance, Risk, and Compliance (GRC) is moving away from manual checklists and towards GRC Engineering, which incorporates compliance into automated agentic workflow. Simultaneously, penetration testing is evolving into AI red teaming

- AI red teaming is the process of simulating attacks on AI models and agents in order to detect flaws in their decision-making or security controls. It is far more advanced than simply pointing a scanner at an IP address, which is a skill in decline.

- GRC Engineering: Rather than static compliance checking, professionals will create systems in which agents autonomously verify compliance in real time throughout the development process.

Comparison: Skills in Decline vs. Skills in Demand

We can classify the shift as follows:

| Declining (Monotonous) Skills | Rising (Strategic) Skills |

| Manual Log Correlation | AI Supervision & Monitoring |

| Basic Penetration Testing/IP Scanning | AI Red Teaming & Agentic Threat Modelling |

| Static Compliance/Checklist Checking | GRC Engineering & Guardrail Design |

| Manual Code Review | Designing Sandboxed Testing Environments |

| Ticketing (Jira/ServiceNow) & Admin Tasks | Non-Human Identity Governance |

| Writing Manual Detection Rules | Interpreting Agent Logic & Decisions |

Why Take Course at WhiteScholars

- Industry-relevant Curriculum: The program combines data science training in Hyderabad with cutting-edge agentic AI applications to teach students both fundamental and advanced skills.

- Hands-on Learning: Unlike purely theoretical programmes, this data scientist course in Hyderabad emphasises real-world projects, case studies, and simulations.

- Expert Mentorship: As a leading data scientist institute in Hyderabad, White Scholars provides personalised guidance from experienced trainers.

- Career Transition Support: Ideal for commerce, engineering, or non-technical graduates looking to gain industry experience through structured data scientist training in Hyderabad.

- Placement Assistance: The institute offers mock interviews, resume workshops, and job placement services, making it one of the most reliable choices for a data science course in Hyderabad.

8. Ethical and Systemic Risks

The autonomy of agents poses ethical and systemic risks. Nicole Caron of Darktrace warns that these agents may inherit biases from their training data, which could lead to incorrect or discriminatory security decisions. Furthermore, if an agent misinterprets a task, it may unintentionally introduce a new vulnerability while attempting to fix another.

Agents also face the threat of social engineering. Attackers may try to manipulate these “non-human identities” in the same way they do humans, using prompt injection or other techniques to trick an agent into granting unauthorised access or leaking data.

While there is an excellent overview of the technical shift towards agentic AI, there are several larger industry contexts to consider:

- The “Shadow AI” Challenge: Similar to Shadow IT, many organisations are now dealing with Shadow AI, in which employees or departments deploy autonomous agents without the knowledge or approval of the central security team. This highlights the importance of identity governance.

- Regulatory Compliance (EU AI Act): In regions such as Europe, the use of autonomous agents in critical infrastructure (including cybersecurity) may soon be classified as high-risk under AI regulation.

- The Speed of Offensive AI: It’s worth noting that attackers also use agentic AI. While there is a primary focus on defence (AWS, CrowdStrike), the “break” in cybersecurity tools is also caused by the inability of traditional tools to keep up with the speed of AI-driven attacks, which can mutate and adapt in real time.

Final Thought

The future of cybersecurity does not involve a choice between humans and machines, but rather a shift towards human-augmented autonomy. Agentic AI is not intended to replace a human security professional’s strategic and creative abilities; rather, it is intended to relieve them of mundane tasks.

As the industry approaches 2026, the successful security professional will be the one who stops fighting the tools and instead supervises the agents. The future belongs to someone who not only uses a tool but also designs, secures, and supervises an autonomous army of agents to complete tasks on a scale and speed that no human could ever match. To thrive in 2026 and beyond, you must stop being the operator and become the machine’s governor.

Frequently Asked Questions

1. What does the Agentic AI course cover?

The Agentic AI course combines traditional data science training in Hyderabad with modern AI concepts such as autonomous agents, generative AI, and machine learning. It teaches students how to solve complex business problems with intelligent systems, making them highly employable in today’s AI-driven market.

2. Who should take this course?

This data scientist course in Hyderabad is ideal for graduates, working professionals, and career changers looking to gain expertise in AI and data science. Even those without prior coding experience can benefit because the course begins with fundamentals and progresses to advanced applications.

3. What makes White Scholars stand out among institutes?

White Scholars is widely regarded as the best data scientist institute in Hyderabad due to its hybrid learning model, strong mentoring, and placement assistance. Students are given structured guidance, hands-on projects, and communication training to ensure they are industry-ready at the end of the programme.

4. What career opportunities exist after completing the course?

Graduates of this data scientist training programme in Hyderabad can pursue careers as data scientists, AI engineers, machine learning specialists, and generative AI consultants. The demand for professionals with agentic AI expertise is rapidly increasing in industries such as finance, healthcare, and e-commerce.

5. Does the course include any practical projects?

Yes, White Scholars’ data science course in Hyderabad is designed around practical, hands-on projects. Learners work with real-world datasets, develop AI models, and design agentic systems that simulate business scenarios. This ensures they gain practical exposure and confidence to apply their skills in professional settings